11. The Dirichlet Green’s Function as an Inverse of $-\nabla^2$: Basis States

Michael Fowler, University of Virginia

Definition

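Recall that the Dirichlet Green’s function $G_D(\mathbf{r},\mathbf{r}')$ is defined to be the potential from a point unit charge at $\mathbf{r}'$ in a volume $V$ bounded by grounded conducting surfaces $S$:

$$\nabla^2 G_D(\mathbf{r},\mathbf{r}') = -\delta(\mathbf{r}-\mathbf{r}'),$$

with $G_D(\mathbf{r},\mathbf{r}')=0$ for $\mathbf{r}$ on $S$ and $\mathbf{r}'$ in $V$. (Following Jackson (1.31), we're leaving the $\varepsilon_0$ out of our definition: it just clutters up the math and can be restored at the end.)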

Point Charge in Empty Space

Let’s start with the simplest case of no boundaries at all (but our function going to zero at infinity): a point charge in empty space.

As we discussed in the Math Bootcamp lectures, the delta function is essentially the continuum equivalent of the identity. Loosely speaking, then, the operator $\nabla^2$ operating on $G$ gives (minus) unity, suggesting that $G$ is an inverse of the differential operator $\nabla^2$ (apart from sign), that is, the inverse of $-\nabla^2$.

So how do we solve the equation? As we discussed in the Bootcamp, differential operators are expressed very simply in the Fourier transform space, in particular $\nabla^2 \to -k^2$, and in empty space $G(\mathbf{r},\mathbf{r}')$ is a function only of $\mathbf{r}-\mathbf{r}'$, not of $\mathbf{r}$ and $\mathbf{r}'$ separately. So, Fourier transforming the differential equation, we find

$$k^2 G(\mathbf{k}) = 1, \qquad G(\mathbf{k}) = \frac{1}{k^2}.$$

We’ve already discussed the Fourier transform of the function on the right. Going back into configuration space we get:

$$G(\mathbf{r},\mathbf{r}') = \frac{1}{4\pi|\mathbf{r}-\mathbf{r}'|}.$$

Of course, we knew this already: it’s just a point charge, so are we just going around in circles?

But this approach suggests how we can handle the much more complicated case where there are boundaries.
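For reference, the inverse transform can be carried out explicitly. What follows is a sketch, assuming the sign convention $-\nabla^2 G=\delta$ (so that $G(\mathbf{k})=1/k^2$) and the Fourier convention $G(\mathbf{r})=\int \frac{d^3k}{(2\pi)^3}\,e^{i\mathbf{k}\cdot\mathbf{r}}G(\mathbf{k})$. Both conventions are assumptions made here for illustration, with $\mathbf{r}$ standing for the separation between field point and source.

```latex
\begin{aligned}
G(\mathbf{r}) &= \int \frac{d^3k}{(2\pi)^3}\,\frac{e^{i\mathbf{k}\cdot\mathbf{r}}}{k^2}
  = \frac{1}{(2\pi)^2}\int_0^\infty dk \int_{-1}^{1} d(\cos\theta)\, e^{ikr\cos\theta} \\
 &= \frac{1}{(2\pi)^2}\int_0^\infty dk\,\frac{2\sin kr}{kr}
  = \frac{1}{2\pi^2 r}\int_0^\infty \frac{\sin u}{u}\,du
  = \frac{1}{2\pi^2 r}\cdot\frac{\pi}{2}
  = \frac{1}{4\pi r}.
\end{aligned}
```

The factor $k^2$ from the volume element cancels the $1/k^2$, which is why the radial integral reduces to the standard Dirichlet integral $\int_0^\infty (\sin u/u)\,du=\pi/2$.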

Analyzing and Generalizing the Method

We solved the problem by going to the Fourier transform space: that is, expressing both sides as integrals over plane waves.

Now (the crucial point) these plane wave states are the eigenstates of the differential operator $\nabla^2$ in empty space!

That is to say, we have transformed to a representation in which $\nabla^2$ is diagonal, and it’s pretty easy to find the inverse of a diagonal operator: it’s another diagonal operator, with each diagonal term replaced by its inverse (see the paragraph below if you’re a bit rusty on this).

We can use this same strategy in a space with boundaries and boundary conditions: we need to find the eigenstates of $\nabla^2$ in this space satisfying the given boundary conditions, then express $\nabla^2$ as a matrix between these eigenstates. It will be diagonal, of course, and so invertible, giving us $G$. The hard part in practice is finding the eigenstates.

Math Reminder: How to Invert an Operator Using the Eigenvectors

You can skip this if it looks familiar from quantum, or some other course.

To understand the inverse of an operator, we'll begin with a simple set of operators, matrices operating on vectors in a finite-dimensional vector space. We'll denote the vectors in the space using the ket notation of Dirac, $|V\rangle$, in the present case just a column vector with $n$ elements. An operator $A$ here is an $n\times n$ matrix (and if we're doing quantum mechanics, it's Hermitian). How do we find the inverse of such an operator?

The procedure is to find its eigenvectors $|i\rangle$, the vectors it leaves invariant in direction:

$$A|i\rangle = \lambda_i|i\rangle \quad \text{(no sum over } i\text{)}.$$

We'll assume there are $n$ such vectors, and that they're linearly independent and span the space. (This is usually the case for physical operators; we can deal with exceptions later.) Any vector in the space can therefore be written as $|V\rangle = v_i|i\rangle$ (dummy suffix notation, repeated suffix implies summation), and using this basis the matrix operator is just diagonal, having elements $\lambda_1, \lambda_2, \ldots, \lambda_n$.

The unit matrix, the one that operating on a vector leaves it completely unchanged, is of course the diagonal 1,1,…,1.

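So the inverse of our operator in this basis is just the diagonal matrix with elements $1/\lambda_1, 1/\lambda_2, \ldots, 1/\lambda_n$ (provided no eigenvalue is zero).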

Writing the Operator Using Bras and Kets

There's a neater way to put all this, a way that generalizes to the cases we're interested in. We've used the ket vector notation $|V\rangle$ to denote a column vector. Dirac invented this notation, and an $n$-component row vector he called a bra, and wrote it $\langle U|$. The point is that the inner product (with the standard definition of matrix multiplication) of these two vectors is a "bracket", bra-ket, $\langle U|V\rangle$, just a number.

If our basis set of vectors are all normalized and orthogonal (the standard approach), then

$$\langle i|j\rangle = \delta_{ij}.$$

What about the outer product $|V\rangle\langle U|$? This is a column vector multiplied by a row vector, so, with the standard definition of matrix multiplication (the $ij$ element of the matrix product is the inner product of the $i$th row of the first matrix with the $j$th column of the second), it is an $n\times n$ matrix.

What does it look like? Consider $|1\rangle\langle 1|$, written in the eigenvector basis. It has 1 in the 11 position, and all the other elements are zero.

Now we see that the matrix can be written

$$A = \sum_i \lambda_i\,|i\rangle\langle i|.$$

With the orthogonality and normalization of the basis set, this representation certainly gives the right answer on all the basis vectors, so by linearity it gives the correct answer for any other vector in the space.

Evidently the inverse is

$$A^{-1} = \sum_i \frac{1}{\lambda_i}\,|i\rangle\langle i|.$$
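The outer-product form of an operator and its inverse can be checked numerically. The following is a minimal sketch using NumPy, assuming a small real symmetric matrix `A` chosen only for illustration (the matrix and the variable names are not from the lecture). It builds $\sum_i \lambda_i |i\rangle\langle i|$ and $\sum_i \lambda_i^{-1} |i\rangle\langle i|$ from the eigenvectors and compares them with `A` and its inverse.

```python
import numpy as np

# A small real symmetric (Hermitian) matrix, chosen only for illustration.
A = np.array([[4.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 2.0]])

# eigh returns eigenvalues lam[i] and orthonormal eigenvectors as the columns of U.
lam, U = np.linalg.eigh(A)

# Sum of outer products |i><i| weighted by lam_i rebuilds A;
# the same sum weighted by 1/lam_i gives the inverse.
A_rebuilt = sum(l * np.outer(U[:, i], U[:, i]) for i, l in enumerate(lam))
A_inverse = sum((1.0 / l) * np.outer(U[:, i], U[:, i]) for i, l in enumerate(lam))

print(np.allclose(A_rebuilt, A))
print(np.allclose(A_inverse @ A, np.eye(3)))
```

The construction only works when every eigenvalue is nonzero, which is the matrix analog of the operator having no zero mode in the space of allowed functions.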

Inverting a Differential Operator with Boundary Conditions: a One-Dimensional Example

Now for the more ambitious goal of finding the inverse of the operator $-\nabla^2$ subject to boundary conditions.

The key is to restrict the space of functions on which the operator operates to those satisfying the boundary conditions. That means we must find a basis set of eigenfunctions of the operator in this subspace. (Any solution of our problem is of course in this subspace.)

As a warm-up, to get the idea, we'll begin with the simplest possible model that has the right ingredients: the one-dimensional operator $-d^2/dx^2$ acting on the space of (twice differentiable!) functions $f(x)$ with $x$ in the interval $0\le x\le 1$ and $f=0$ at those boundary points. (Actually, there’s a simpler way to solve this simple system, just direct integration, as we’ll discuss shortly, but here we want to show the general method. Both approaches are necessary for many problems.)

Following the strategy laid out for the matrix above, we first find the eigenfunctions for this operator, subject to the given boundary conditions.

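The normalized eigenfunctions are

$$|n\rangle = \sqrt{2}\,\sin n\pi x, \qquad n = 1, 2, 3, \ldots$$

These are real functions, and the bracket of two of them is

$$\langle m|n\rangle = \int_0^1 2\sin m\pi x\,\sin n\pi x\,dx = \delta_{mn}.$$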

Writing This in Terms of Dirac Localized Kets

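This can be written more neatly, purely in terms of bras and kets, by bringing in localized kets, following Dirac: delta functions labeled by position,

$$|x'\rangle = \delta(x-x'),$$

but this is pretty sloppy: it’s only meaningful inside an integral, and the delta function is not a normalizable wave function in the usual way. (You can check this by defining it as a limit of some sequence of functions, say equal to zero for $|x-x'|>\Delta$ and equal to $1/2\Delta$ for $|x-x'|<\Delta$. The norm diverges as $\Delta\to 0$.)

Anyway, the expression $\langle x|n\rangle$ is defined by

$$\langle x|n\rangle = \int_0^1 \delta(x-x')\,\sqrt{2}\,\sin n\pi x'\,dx' = \sqrt{2}\,\sin n\pi x.$$

Dirac defined “normalization” of these local kets by

$$\langle x|x'\rangle = \delta(x-x'),$$

the continuum analog of $\langle m|n\rangle=\delta_{mn}$ and, as we’ll see, this leads to a consistent formalism.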

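So the unit matrix, which can be written in terms of our set of basis functions as

$$I = \sum_{n=1}^{\infty} |n\rangle\langle n|,$$

can equally be written

$$I = \int_0^1 |x\rangle\langle x|\,dx.$$

(OK, this is mathematically pretty dubious: it’s not even a countably infinite basis! But if we’re careful, it is well-defined when the localized bras and kets appear in brackets in an expression, in the present case with our basis functions, or in integrals, the same thing.)

To check for consistency:

$$\langle m|n\rangle = \int_0^1 \langle m|x\rangle\langle x|n\rangle\,dx = \int_0^1 2\sin m\pi x\,\sin n\pi x\,dx = \delta_{mn}$$

and

$$|x'\rangle = \sum_{n=1}^{\infty} |n\rangle\langle n|x'\rangle = \sum_{n=1}^{\infty} \sqrt{2}\,\sin n\pi x'\;|n\rangle.$$

(So defining the position ket in this way puts it in our subspace of functions that are zero at the boundaries.)

Hence:

$$\delta(x-x') = \langle x|x'\rangle = \sum_{n=1}^{\infty}\langle x|n\rangle\langle n|x'\rangle = 2\sum_{n=1}^{\infty}\sin n\pi x\,\sin n\pi x'.$$

So the function $\delta(x-x')$ is equivalent to the “unit matrix” in the space of continuous functions defined in the unit interval and equal to zero at the boundaries.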
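Orthonormality and completeness of the sine basis can be checked numerically. The sketch below assumes the basis functions are $\sqrt{2}\sin n\pi x$ on the unit interval, truncates the sum at 50 terms, and uses the test function $x(1-x)$, which vanishes at both boundaries. The helper names `sine_basis` and `trapezoid`, the truncation, and the grid size are all choices made here for illustration.

```python
import numpy as np

def sine_basis(n_max, x):
    """Rows are the normalized eigenfunctions sqrt(2) sin(n pi x), n = 1..n_max."""
    n = np.arange(1, n_max + 1)[:, None]
    return np.sqrt(2.0) * np.sin(n * np.pi * x[None, :])

def trapezoid(y, x):
    """Trapezoidal rule along the last axis of y on the grid x."""
    return np.sum(0.5 * (y[..., 1:] + y[..., :-1]) * np.diff(x), axis=-1)

x = np.linspace(0.0, 1.0, 2001)
f = x * (1.0 - x)                  # test function, zero at x = 0 and x = 1

basis = sine_basis(50, x)          # shape (50, 2001)
gram = trapezoid(basis[:, None, :] * basis[None, :, :], x)   # brackets <m|n>
coeffs = trapezoid(basis * f, x)   # brackets <n|f>
f_rebuilt = coeffs @ basis         # sum over n of |n><n|f>

print(np.max(np.abs(gram - np.eye(50))))   # orthonormality error
print(np.max(np.abs(f_rebuilt - f)))       # truncation error of the unit operator
```

Inserting the truncated unit operator reproduces the test function up to a small truncation error, which shrinks as more basis states are kept.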

We're finally ready to find the Green's function for the differential operator in this space.

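The differential operator $-d^2/dx^2$ is of course diagonal in the $|n\rangle$ basis (they're its eigenstates) with eigenvalues $n^2\pi^2$.

That is, it can be written

$$-\frac{d^2}{dx^2} = \sum_{n=1}^{\infty} |n\rangle\, n^2\pi^2\, \langle n|$$

(so that $-\dfrac{d^2}{dx^2}|m\rangle = m^2\pi^2|m\rangle$).

The unit matrix is

$$\delta(x-x') = \sum_{n=1}^{\infty} \langle x|n\rangle\langle n|x'\rangle.$$

It follows that the Dirichlet Green's function, the inverse of the differential operator, must be

$$G(x,x') = \sum_{n=1}^{\infty} \frac{\langle x|n\rangle\langle n|x'\rangle}{n^2\pi^2} = 2\sum_{n=1}^{\infty}\frac{\sin n\pi x\,\sin n\pi x'}{n^2\pi^2}.$$

Exercise: Check that this is indeed the solution to the equation:

$$-\frac{d^2}{dx^2}G(x,x') = \delta(x-x').$$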

So we’ve formally solved the problem, but it’s not exactly clear from this expression what the Green’s function looks like!
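One way to get a feel for the eigenfunction sum is to use it. The sketch below assumes the series form $G(x,x') = 2\sum_n \sin n\pi x\,\sin n\pi x'/(n\pi)^2$ on the unit interval, truncated at a finite number of terms, and uses it to solve $-u''=f$ with $u(0)=u(1)=0$ through $u(x)=\int_0^1 G(x,x')f(x')\,dx'$. The function names, the truncation `n_max`, and the quadrature grid are illustrative choices, not part of the lecture.

```python
import numpy as np

def green_series(x, xp, n_max=2000):
    """Truncated eigenfunction sum 2 * sum_n sin(n pi x) sin(n pi x') / (n pi)^2."""
    n = np.arange(1, n_max + 1)
    return 2.0 * np.sum(np.sin(n * np.pi * x) * np.sin(n * np.pi * xp) / (n * np.pi) ** 2)

def solve_poisson_1d(f, x, grid_points=1001, n_max=2000):
    """u(x) = integral over [0, 1] of G(x, x') f(x') dx', by the trapezoidal rule."""
    xp = np.linspace(0.0, 1.0, grid_points)
    g = np.array([green_series(x, s, n_max) for s in xp])
    y = g * f(xp)
    return np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(xp))

# Uniform source f = 1: the exact solution of -u'' = 1 with u(0) = u(1) = 0
# is u(x) = x (1 - x) / 2, so the midpoint value should be close to 1/8.
u_mid = solve_poisson_1d(lambda s: np.ones_like(s), 0.5)
print(u_mid, 0.5 * (1 - 0.5) / 2)
```

The integrated result converges much faster than the Green's function series itself, because integrating against a smooth source suppresses the high-$n$ terms by an extra power of $1/n$.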

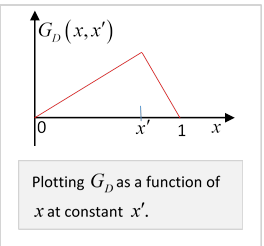

Picturing the Dirichlet Green’s Function

However, a little thought about the original differential equation makes it clear.

As a function of $x$ for given $x'$, away from the point $x=x'$, $G(x,x')$ must be just a straight line: the solution of

$$-\frac{d^2}{dx^2}G(x,x') = \delta(x-x')$$

goes to zero at the boundaries and has unit discontinuity in slope at $x=x'$. (Differentiating a slope discontinuity gives a step discontinuity, the next differentiation gives the delta function.)

The neat way to write it is in terms of $x_<$ and $x_>$, the smaller and the larger of $x$ and $x'$.

In terms of these variables, the expression is simple:

$$G(x,x') = x_<\,(1-x_>).$$

Exercise: Convince yourself that this is correct, in particular the change in slope, and that it is symmetric in $x, x'$, as a Green’s function must be.
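A quick numerical comparison can support the exercise. The sketch below assumes the closed form $G(x,x') = x_<(1-x_>)$ on the unit interval, with $x_<$ and $x_>$ the smaller and larger of $x$ and $x'$, and compares it with a truncated eigenfunction sum $2\sum_n \sin n\pi x\,\sin n\pi x'/(n\pi)^2$. The function names, the sample points, and the truncation are illustrative assumptions.

```python
import numpy as np

def green_closed(x, xp):
    """Closed form x_< (1 - x_>) on the unit interval."""
    return np.minimum(x, xp) * (1.0 - np.maximum(x, xp))

def green_series(x, xp, n_max=5000):
    """Truncated eigenfunction sum 2 * sum_n sin(n pi x) sin(n pi x') / (n pi)^2."""
    n = np.arange(1, n_max + 1)
    return 2.0 * np.sum(np.sin(n * np.pi * x) * np.sin(n * np.pi * xp) / (n * np.pi) ** 2)

xp = 0.3
for x in (0.1, 0.3, 0.7):
    print(x, green_closed(x, xp), green_series(x, xp))

# One-sided finite-difference slopes of the closed form on either side of x'.
h = 1e-6
slope_left = (green_closed(xp, xp) - green_closed(xp - h, xp)) / h
slope_right = (green_closed(xp + h, xp) - green_closed(xp, xp)) / h
slope_jump = slope_right - slope_left
print(slope_jump)
```

The slope drops by one across $x=x'$, which is what $-d^2G/dx^2=\delta(x-x')$ requires, and the two forms agree to within the truncation error of the series.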

For three-dimensional problems, we’ll often find it best to use the complete set of states representation for angle-type variables, the direct integration for radial variables.

Two Different Representations of the Green’s Function

It’s worth thinking a little more about the two different expressions for the Green’s function.

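A: $\displaystyle G(x,x') = 2\sum_{n=1}^{\infty}\frac{\sin n\pi x\,\sin n\pi x'}{n^2\pi^2}$

B: $\displaystyle G(x,x') = x_<\,(1-x_>)$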

Expression A is a sum over all eigenstates of the differential operator, to be expected since it comes from integrating the delta function, which, being infinitely sharp, has contributions from all momentum/energy states. And, it’s difficult to visualize.

Expression B apparently involves only zero energy eigenstates of the operator: one satisfying the $x=0$ boundary condition, the other the $x=1$ boundary condition, and, in contrast to A, is very easy to see. But how can these two expressions be equivalent? The point is that B has a slope discontinuity. This generates the delta function when the differential operator is applied, and it means that if you take a Fourier transform of B as a function of $x$ for fixed $x'$ there will be contributions from all energies.

These two representations are a recurring theme (especially in homework questions).
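The equivalence can be made explicit by projecting the closed form onto the sine basis. The following worked integral is a sketch, assuming the unit interval, the normalized eigenfunctions $\sqrt{2}\sin n\pi x$, and the closed form $G(x,x') = x_<(1-x_>)$. Each piece is integrated by parts once.

```latex
\begin{aligned}
\int_0^1 \sqrt{2}\,\sin n\pi x\; G(x,x')\,dx
 &= \sqrt{2}\,(1-x')\int_0^{x'} x\,\sin n\pi x\,dx
  + \sqrt{2}\,x'\int_{x'}^{1} (1-x)\,\sin n\pi x\,dx \\
 &= \sqrt{2}\,(1-x')\left[-\frac{x'\cos n\pi x'}{n\pi} + \frac{\sin n\pi x'}{n^2\pi^2}\right]
  + \sqrt{2}\,x'\left[\frac{(1-x')\cos n\pi x'}{n\pi} + \frac{\sin n\pi x'}{n^2\pi^2}\right] \\
 &= \frac{\sqrt{2}\,\sin n\pi x'}{n^2\pi^2}.
\end{aligned}
```

The cosine terms cancel, and what remains is exactly the coefficient of $\sqrt{2}\sin n\pi x$ in the eigenfunction sum, so every eigenstate contributes with weight $1/n^2\pi^2$.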

Physics

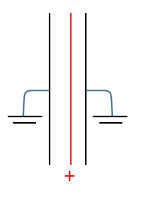

Does this one-dimensional model have any electrostatic realization? Yes: it’s equivalent to an infinite uniform sheet of charge between two infinite parallel grounded conductors, which will have negative charge induced, to give linear potential drop from the charged sheet to the plates.

Exercise: for a non-central sheet of charge, what are the charges induced?

A mechanical one-dimensional realization would be a taut string pushed out of line by a sharp object to a V shape (in general with different length arms, of course). The two-dimensional generalization would be a rubber sheet attached to, say, the four sides of a square frame, pushed up at one point. Everywhere else the rubber would relax to the minimum energy, which is given by the displacement $\phi$ (in linear approximation) satisfying the two-dimensional Laplace equation $\nabla^2\phi = 0$.

Point Charge Inside a Grounded Cubical Box: Induced Surface Charge

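The obvious three-dimensional generalization of the one-dimensional delta function presented above to a 3D box with the six walls at $x=0,1$, $y=0,1$, $z=0,1$ is

$$\delta(\mathbf{r}-\mathbf{r}') = 8\sum_{l,m,n=1}^{\infty} \sin l\pi x\,\sin l\pi x'\,\sin m\pi y\,\sin m\pi y'\,\sin n\pi z\,\sin n\pi z'.$$

Using the same argument as that above for the one-dimensional case, the three-dimensional Dirichlet Green’s function inside a cubical box with all walls at zero potential is:

$$G(\mathbf{r},\mathbf{r}') = 8\sum_{l,m,n=1}^{\infty} \frac{\sin l\pi x\,\sin l\pi x'\,\sin m\pi y\,\sin m\pi y'\,\sin n\pi z\,\sin n\pi z'}{\pi^2\left(l^2+m^2+n^2\right)}.$$

It’s straightforward to check that

$$-\nabla^2 G(\mathbf{r},\mathbf{r}') = \delta(\mathbf{r}-\mathbf{r}').$$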
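A truncated version of the triple sum is easy to evaluate numerically. The sketch below assumes a unit cube with walls at $x, y, z = 0$ and $1$, eigenvalues $\pi^2(l^2+m^2+n^2)$, and the convention $-\nabla^2 G=\delta$. The function name `green_box`, the cutoff `n_max`, and the sample points are illustrative. The series converges slowly for $\mathbf{r}\ne\mathbf{r}'$, so the values printed here are rough, but the symmetry in $\mathbf{r},\mathbf{r}'$ and the vanishing on the walls hold term by term.

```python
import numpy as np

def green_box(r, rp, n_max=40):
    """Truncated triple sine series for the unit-cube Dirichlet Green's function.

    Assumes walls at x, y, z = 0 and 1 and the convention -laplacian(G) = delta.
    """
    k = np.arange(1, n_max + 1)
    # One-dimensional factors sin(k pi x) sin(k pi x') for each coordinate.
    fx = np.sin(k * np.pi * r[0]) * np.sin(k * np.pi * rp[0])
    fy = np.sin(k * np.pi * r[1]) * np.sin(k * np.pi * rp[1])
    fz = np.sin(k * np.pi * r[2]) * np.sin(k * np.pi * rp[2])
    # Eigenvalues pi^2 (l^2 + m^2 + n^2) on an (l, m, n) grid.
    lam = np.pi ** 2 * (k[:, None, None] ** 2 + k[None, :, None] ** 2 + k[None, None, :] ** 2)
    terms = fx[:, None, None] * fy[None, :, None] * fz[None, None, :] / lam
    return 8.0 * np.sum(terms)

a = (0.3, 0.4, 0.5)
b = (0.6, 0.2, 0.7)
print(green_box(a, b), green_box(b, a))   # symmetric in r and r'
print(green_box((0.3, 0.4, 1.0), b))      # essentially zero on the top wall
```

Each term is a product of one-dimensional factors divided by a single eigenvalue, so the sum does not factor into three one-dimensional Green's functions. That is why the cutoff has to be applied on the full $(l,m,n)$ grid.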

So what can we do with this Green’s function? Well, if we put a charge at any point inside a grounded conducting cubical box, this gives the potential at any other point and, in particular, by differentiating this expression in the normal direction at the walls, we can find the charge induced on the walls (remember they’re held at zero potential, meaning grounded, so charge will flow into these walls when the point charge is put inside the box).

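For example, for a point charge $q$ at $\mathbf{r}'=(x',y',z')$, the surface charge density induced at the point $(x,y,1)$ on the top face is

$$\sigma(x,y) = q\left.\frac{\partial G}{\partial z}\right|_{z=1} = \frac{8q}{\pi}\sum_{l,m,n=1}^{\infty} \frac{(-1)^n\, n\,\sin l\pi x\,\sin l\pi x'\,\sin m\pi y\,\sin m\pi y'\,\sin n\pi z'}{l^2+m^2+n^2}.$$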

Potential Inside a Cubical (Nonconducting) Box with Given Potential at Walls

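Recall now one of the results of the Reciprocation Theorem: a point charge $q$ at $\mathbf{r}'$ inside a closed grounded container induces a surface charge density $\sigma(\mathbf{r}_s)$ at points $\mathbf{r}_s$ on the walls. From the theorem, if we have an identical empty container with specified potentials $V(\mathbf{r}_s)$ at the walls (so it’s not in general a connected conductor), the potential at the corresponding point $\mathbf{r}'$ inside is

$$\phi(\mathbf{r}') = -\frac{1}{q}\oint_S \sigma(\mathbf{r}_s)\,V(\mathbf{r}_s)\,dS.$$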

We can take five of the six walls to be at zero potential, then use linearity to add six such results.

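Assume the potential is $V(x,y)$ on the face at $z=1$, so $\phi(x,y,1)=V(x,y)$, and $\phi=0$ on the other faces. Then at any point $(x',y',z')$ inside the cube,

$$\phi(x',y',z') = \frac{8}{\pi}\sum_{l,m,n=1}^{\infty} \frac{(-1)^{n+1}\, n\,\sin l\pi x'\,\sin m\pi y'\,\sin n\pi z'}{l^2+m^2+n^2}\int_0^1\!\!\int_0^1 V(x,y)\,\sin l\pi x\,\sin m\pi y\,dx\,dy.$$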

In the next lecture, we will analyze this same problem with a rather different approach, and find a different-looking (but of course equivalent) answer. In fact, the two approaches are equivalent to the two forms of the Green's function discussed earlier in this lecture for the simple one-dimensional system.

Exercise: Suppose the six faces of a cube are conductors, but insulated from each other. Suppose the top face is held at potential $V_0$, and the others are grounded. Use the Reciprocation Theorem to prove the potential at the center of the cube is $V_0/6$. Does this approach also work for a dodecahedron?